42% of enterprises report that over half of their AI projects are delayed, under-performing, or failing because of data readiness issues.

Another 43% cite data readiness as a top obstacle to AI success.

If this is the state of play for AI in finance, supply chain, and other traditionally structured domains, imagine the challenge for HR, where data has its own brand of messiness.

Unlike finance or supply chain data, people data is riddled with subjective ratings, fragmented systems, and cultural quirks.

Is a 3 out of 5 performance rating “meets expectations” or “needs improvement”?

Does attrition include contractors?

Do you have five versions of the same employee floating around on different platforms?

Before you rush to plug AI into your HR stack, it’s worth pausing and asking, “Is our house in order?”

That's precisely what this blog offers: a practical checklist to determine whether your HR data is truly AI-ready, or whether you're just setting yourself up for smarter dashboards that deliver the same old problems.

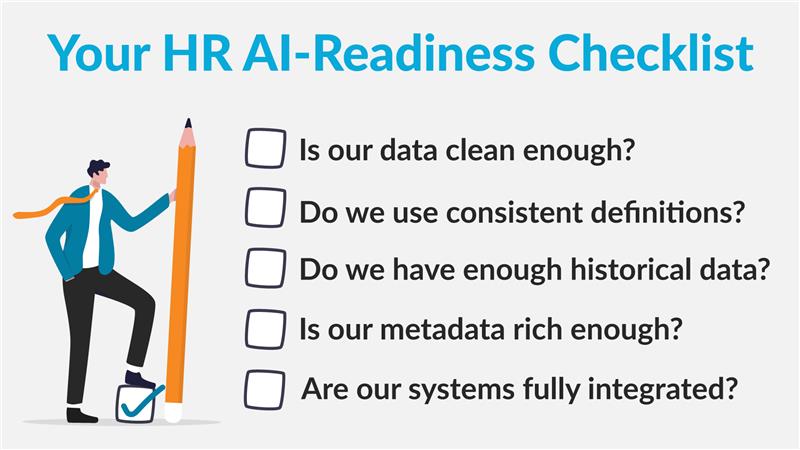

Your AI-readiness checklist for HR data

Think of this as your pre-flight safety check before you let AI loose on your workforce data. If the foundations aren’t stable, even the most sophisticated model won’t get you far.

1. Data cleanliness

Are termination reasons missing? Do you have duplicate employee IDs? Are demographic fields left blank?

If the answer is yes, your AI model is already flying blind. Dirty data doesn't just reduce accuracy. Worse, it actively teaches your model the wrong lessons.

For example, if two IDs exist for the same employee, your attrition model might mistakenly log a “resignation” for one ID and an “active status” for the other, skewing your turnover rates.
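To make the cleanliness check concrete, here is a minimal Python sketch of an audit you could run before any modeling. It assumes a hypothetical export where each record is a dict with `employee_id`, `email`, `status`, `gender`, and `termination_reason` fields, and it treats a shared email address as a stand-in for "same person". Your own matching key (national ID, name plus date of birth) will differ.

```python
from collections import defaultdict

# Hypothetical field names; map them to your own HRIS export.
REQUIRED_FIELDS = ("employee_id", "email", "status", "gender")


def audit_records(records):
    """Flag duplicate IDs, one person under several IDs, and blank fields."""
    id_counts = defaultdict(int)
    ids_by_email = defaultdict(set)
    missing = []
    for rec in records:
        emp_id = rec.get("employee_id")
        if emp_id:
            id_counts[emp_id] += 1
        # Normalize email so "Ana@..." and "ana@..." resolve to the same person.
        email = (rec.get("email") or "").strip().lower()
        if email and emp_id:
            ids_by_email[email].add(emp_id)
        gaps = [f for f in REQUIRED_FIELDS if not rec.get(f)]
        # A terminated record without a reason is unusable for attrition modeling.
        if rec.get("status") == "terminated" and not rec.get("termination_reason"):
            gaps.append("termination_reason")
        if gaps:
            missing.append((emp_id, gaps))
    duplicate_ids = sorted(i for i, n in id_counts.items() if n > 1)
    same_person = {e: sorted(ids) for e, ids in ids_by_email.items() if len(ids) > 1}
    return duplicate_ids, same_person, missing


# Illustrative records: one person under two IDs, and one ID used twice.
records = [
    {"employee_id": "E001", "email": "ana@example.com", "status": "active", "gender": "F"},
    {"employee_id": "E107", "email": "Ana@example.com", "status": "terminated",
     "gender": "F", "termination_reason": "resigned"},
    {"employee_id": "E002", "email": "raj@example.com", "status": "terminated", "gender": ""},
    {"employee_id": "E002", "email": "raj@example.com", "status": "active", "gender": "M"},
]
dup_ids, same_person, missing = audit_records(records)
print(dup_ids)      # ['E002']
print(same_person)  # {'ana@example.com': ['E001', 'E107']}
print(missing)      # [('E002', ['gender', 'termination_reason'])]
```

The E001/E107 pair is exactly the "resignation on one ID, active on the other" case described above; resolving it before training is far cheaper than explaining a skewed turnover rate afterward.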

2. Standardized definitions

Are you tagging exits as voluntary or involuntary correctly? What exactly qualifies as “high potential”? Every AI prediction rests on your definitions. When those definitions vary by region, manager, or system, you get chaos at scale, not AI innovation.

For example, if one business unit counts only voluntary exits as turnover while another also includes involuntary exits, your global attrition model may over-predict churn in one region and under-predict it in another.
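One way to enforce a single definition is to map every local exit-reason code to one global category and compute turnover only through that mapping. The sketch below is illustrative: the reason codes and their voluntary/involuntary assignments are hypothetical and should come from your own HR policy.

```python
# Hypothetical global taxonomy: every local exit-reason code maps to exactly one category.
EXIT_TAXONOMY = {
    "resigned": "voluntary",
    "retired": "voluntary",
    "better_offer": "voluntary",
    "laid_off": "involuntary",
    "terminated_for_cause": "involuntary",
    "contract_ended": "involuntary",
}


def classify_exit(reason_code):
    """Map a local reason code to the global category; fail loudly if unmapped."""
    key = reason_code.strip().lower()
    if key not in EXIT_TAXONOMY:
        raise ValueError(f"Unmapped exit reason: {reason_code!r}")
    return EXIT_TAXONOMY[key]


def turnover_rate(reason_codes, avg_headcount, include=("voluntary",)):
    """Exits in the included categories divided by average headcount."""
    exits = sum(1 for code in reason_codes if classify_exit(code) in include)
    return exits / avg_headcount


emea_exits = ["resigned", "laid_off", "better_offer"]
apac_exits = ["Resigned ", "contract_ended"]
print(turnover_rate(emea_exits, 100))  # 0.02
print(turnover_rate(apac_exits, 50))   # 0.02
print(turnover_rate(emea_exits, 100, include=("voluntary", "involuntary")))  # 0.03
```

Raising an error on an unmapped code is a deliberate choice: it forces the definition conversation to happen up front instead of letting a new regional code silently land in the wrong bucket.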

3. Historical baselines

AI can't learn much from a single quarter. It needs two to three years of stable history to spot patterns and forecast trends.

If your organization changes job codes or reporting structures every six months, flag it early. Without consistency, the model can’t tell the difference between business evolution and random fluctuation.

For example, an AI compensation model trained on only six months of data won’t recognize cyclical bonus payouts. It may misinterpret them as anomalies.
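A quick pre-flight test for this item is to measure how much history you actually have and how often your job-code catalogue has changed within it. This Python sketch assumes hypothetical inputs: a list of payroll record dates and periodic snapshots of the job codes in use. The 24-month threshold simply mirrors the lower end of the "two to three years" guideline above.

```python
from datetime import date

MIN_MONTHS = 24  # lower end of the "two to three years" guideline


def months_of_history(record_dates):
    """Calendar months between the earliest and latest record dates."""
    first, last = min(record_dates), max(record_dates)
    return (last.year - first.year) * 12 + (last.month - first.month)


def job_code_changes(snapshots):
    """Count how often the job-code catalogue changed between consecutive snapshots.

    snapshots: list of (snapshot_date, set_of_job_codes), in any order.
    """
    ordered = sorted(snapshots, key=lambda s: s[0])
    return sum(1 for prev, cur in zip(ordered, ordered[1:]) if prev[1] != cur[1])


payroll_dates = [date(2023, 7, 31), date(2024, 1, 31), date(2025, 6, 30)]
snapshots = [
    (date(2024, 1, 1), {"ENG1", "ENG2"}),
    (date(2024, 7, 1), {"SWE-I", "SWE-II"}),  # codes renamed mid-year
    (date(2025, 1, 1), {"SWE-I", "SWE-II"}),
]
history_months = months_of_history(payroll_dates)
print(history_months, history_months >= MIN_MONTHS)  # 23 False
print(job_code_changes(snapshots))                    # 1
```

A result like this (just under two years, with a mid-period renaming) is the "flag it early" signal: either extend the history or build a crosswalk from old job codes to new ones before training.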

4. Metadata tagging

Does each employee record carry attributes like job level, location, or tenure? Without metadata, your model only sees a flat file. It can surface “what” is happening, but it won’t be able to explain the “why.”

For example, a model might detect high attrition rates but, without tagging job level or tenure, it won’t reveal whether the issue is concentrated among early-career employees in a specific function.
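The difference between "what" and "why" shows up as soon as you group the same exits by their metadata. In this Python sketch, the field names (`job_level`, `tenure_years`, `left`) and the tenure bands are hypothetical; the point is that the overall rate hides where the attrition is concentrated.

```python
from collections import Counter


def tenure_band(years):
    """Hypothetical tenure bands; align them with your own definitions."""
    if years < 2:
        return "0-2y"
    if years < 5:
        return "2-5y"
    return "5y+"


def attrition_by_segment(employees):
    """Exit rate per (job_level, tenure_band) segment.

    employees: dicts with 'job_level', 'tenure_years', and 'left' (bool).
    """
    totals, exits = Counter(), Counter()
    for e in employees:
        key = (e["job_level"], tenure_band(e["tenure_years"]))
        totals[key] += 1
        exits[key] += int(e["left"])
    return {key: exits[key] / totals[key] for key in totals}


staff = [
    {"job_level": "L1", "tenure_years": 0.5, "left": True},
    {"job_level": "L1", "tenure_years": 1.5, "left": True},
    {"job_level": "L1", "tenure_years": 1.0, "left": False},
    {"job_level": "L3", "tenure_years": 6.0, "left": False},
    {"job_level": "L3", "tenure_years": 7.0, "left": False},
]
overall = sum(e["left"] for e in staff) / len(staff)
segments = attrition_by_segment(staff)
print(overall)                                        # 0.4
print({k: round(v, 2) for k, v in segments.items()})  # {('L1', '0-2y'): 0.67, ('L3', '5y+'): 0.0}
```

Without the job-level and tenure tags, all the model can say is "40% attrition"; with them, it can point to early-career L1 staff as the segment to investigate.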

5. System integration

Payroll, learning systems, ATS, and core HR – are they feeding into a single, trusted data layer? If not, you’ll end up with siloed AI insights that miss half the story. AI works best when it sees the full employee lifecycle, not just fragments of it.

For example, if your ATS data isn’t integrated, an AI-powered attrition tool may predict rising turnover without factoring in the surge of recent hires who naturally inflate exit rates within their first year.
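Integration is what lets you join exits from core HR with hire dates from the ATS and separate first-year churn from established-employee turnover. This is a minimal Python sketch assuming both systems share an `employee_id` key (a hypothetical simplification; in practice that shared key is often the hardest part). The one-year cutoff is illustrative.

```python
from datetime import date


def split_exits_by_tenure(exits, hire_dates, cutoff_days=365):
    """Split exits into first-year and established leavers using ATS hire dates.

    exits: {employee_id: exit_date} from core HR
    hire_dates: {employee_id: hire_date} from the ATS
    """
    first_year, established, unmatched = [], [], []
    for emp_id, exit_day in exits.items():
        hired = hire_dates.get(emp_id)
        if hired is None:
            unmatched.append(emp_id)  # no ATS record: an integration gap to resolve
        elif (exit_day - hired).days < cutoff_days:
            first_year.append(emp_id)
        else:
            established.append(emp_id)
    return first_year, established, unmatched


core_hr_exits = {"E1": date(2025, 3, 1), "E2": date(2025, 3, 15), "E3": date(2025, 4, 1)}
ats_hires = {"E1": date(2024, 11, 1), "E2": date(2019, 5, 20)}
first_year, established, unmatched = split_exits_by_tenure(core_hr_exits, ats_hires)
print(first_year, established, unmatched)  # ['E1'] ['E2'] ['E3']
```

The `unmatched` list is worth reporting on its own: every ID there is an exit the attrition model cannot put in context.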

The hidden pitfalls: Is your HR team overlooking them?

Even with the checklist above, there are landmines most HR teams don't see coming. These pitfalls can quietly derail your AI initiatives, not because the models are weak, but because the data is misleading.

1. Bias baked into history

If your past promotions favored one gender, department, or school background, AI will faithfully replicate that pattern unless you intervene. Algorithms don’t challenge history; they amplify it.

For example, a promotion-readiness model may “learn” that leadership roles skew toward one gender simply because that's what your records show, not because that gender inherently makes better candidates.

What to do instead: Run regular bias audits and use fairness checks to detect skewed patterns before models go live.
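One widely used screening check for skewed historical outcomes is the adverse impact ratio behind the "four-fifths rule" from US employment-selection guidelines: compare each group's selection rate with the highest group's rate and flag ratios below 0.8. The Python sketch below applies it to past promotions. It is one simple fairness check, not a complete bias audit, and the group labels and counts are invented for illustration.

```python
def selection_rates(outcomes):
    """outcomes: list of (group, selected: bool). Returns the selection rate per group."""
    totals, selected = {}, {}
    for group, was_selected in outcomes:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def adverse_impact_ratios(outcomes):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    return {g: (r / best if best else 1.0) for g, r in rates.items()}


def flag_groups(outcomes, threshold=0.8):
    """Groups whose ratio falls below the four-fifths (80%) rule of thumb."""
    return sorted(g for g, ratio in adverse_impact_ratios(outcomes).items() if ratio < threshold)


# Illustrative promotion history: 30% of group_a promoted vs. 15% of group_b.
promotions = (
    [("group_a", True)] * 30 + [("group_a", False)] * 70
    + [("group_b", True)] * 15 + [("group_b", False)] * 85
)
print(selection_rates(promotions))  # {'group_a': 0.3, 'group_b': 0.15}
print(flag_groups(promotions))      # ['group_b']
```

If a group is flagged in the training history, a model trained on that history will likely reproduce the gap, so this is the point to intervene, before the model goes live.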

2. Event blindness

AI doesn’t automatically “know” about your merger, the arrival of a new CEO, or pandemic-related furloughs unless you explicitly tag those events. Without context, it will misread your data.

For example, a spike in resignations during a post-merger restructuring may be flagged as a long-term attrition trend, when in reality it was a one-time disruption.

What to do instead: Maintain a clear “events log” that tags major organizational shifts so AI can separate noise from true patterns.
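An events log can be as simple as a list of labeled date ranges that every record is checked against. This Python sketch uses a single hypothetical event with made-up dates; it tags each exit that falls inside a logged event so the disruption can be modeled separately from baseline attrition.

```python
from datetime import date

# Hypothetical events log: (label, start_date, end_date), both ends inclusive.
EVENTS = [
    ("post-merger restructuring", date(2024, 3, 1), date(2024, 6, 30)),
]


def tag_event(day, events=EVENTS):
    """Return the label of the logged event covering `day`, or None."""
    for label, start, end in events:
        if start <= day <= end:
            return label
    return None


def split_by_event(exit_dates, events=EVENTS):
    """Separate exits during logged events from baseline exits."""
    tagged = [d for d in exit_dates if tag_event(d, events)]
    baseline = [d for d in exit_dates if not tag_event(d, events)]
    return tagged, baseline


exit_dates = [date(2024, 2, 10), date(2024, 4, 5), date(2024, 5, 20), date(2024, 9, 1)]
tagged, baseline = split_by_event(exit_dates)
print(len(tagged), len(baseline))   # 2 2
print(tag_event(date(2024, 4, 5)))  # post-merger restructuring
```

In a real pipeline the event label would typically become a model feature, so the model can learn that exits inside the restructuring window behave differently from the trend.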

3. The illusion of accuracy

AI can make bad data look polished. Dashboards will still deliver sleek charts and predictive scores, but that doesn’t make the insight valid. In fact, a pretty interface can lull leaders into a false sense of confidence.

For example, if your data undercounts contractor exits, the model might confidently report “attrition under control” while, in reality, a key workforce segment is walking out the door.

What to do instead: Validate model outputs against ground truth – frontline HR feedback, audit samples, and qualitative insights – before taking action.
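Validation against ground truth can start as a simple per-segment comparison between what the dashboard reports and what an audit sample shows. In this Python sketch the segment names, rates, and the two-percentage-point tolerance are all hypothetical; note that a segment missing from the reported figures entirely (here, contractors) is treated as a gap in its own right.

```python
def validation_gaps(reported, audited, tolerance=0.02):
    """Compare reported attrition rates with audited ground truth, per segment.

    Returns {segment: (reported_rate, audited_rate)} for every segment whose gap
    exceeds `tolerance` or that is missing from the reported figures entirely.
    """
    gaps = {}
    for segment, true_rate in audited.items():
        shown = reported.get(segment)
        if shown is None or abs(shown - true_rate) > tolerance:
            gaps[segment] = (shown, true_rate)
    return gaps


dashboard = {"employees": 0.11}  # contractors never reach the model
audit_sample = {"employees": 0.12, "contractors": 0.35}
gaps = validation_gaps(dashboard, audit_sample)
print(gaps)  # {'contractors': (None, 0.35)}
```

A polished dashboard would show 11% attrition and nothing else; this check surfaces the contractor segment the model never saw.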

The Bottom Line: Strong foundations, smarter AI

Gartner estimates that poor data quality costs organizations an average of $15 million every year. AI projects built on poor data add substantially to this waste. Costs rise, predictions mislead, and the errors look deceptively “accurate” on polished dashboards.

Preparing data should account for 60–80% of the total effort in any AI project, and for good reason: data is the cornerstone of machine learning.

The mandate for HR leaders is clear: before you roll out that shiny new HR chatbot or predictive attrition model, get your foundations right. Clean data, consistent definitions, rich metadata, and integrated systems aren’t back-office chores. They are the bedrock of AI success.

AI will never be smarter than the data it learns from. Invest in HR data readiness today or risk building tomorrow’s people strategy on a very expensive illusion.

So, ask yourself: Is your HR data truly ready for AI? Or are you just hoping for the best?

The answer doesn't have to be binary. Every HR team has messy data, and that's normal. The good news is that starting the analytics journey itself helps you uncover and fix these issues. Each dashboard, report, and insight you build makes your data cleaner, more consistent, and more AI-ready. Remember: readiness doesn't mean waiting for “perfect” data. Lean in instead. AI readiness isn't a finish line; it's a continuous process that gets stronger as you move forward. So don't let the hurdles stop you.

Ragu Veeraraghavan

VP of Analytics, SplashBI

Ragu Veeraraghavan brings deep expertise in people analytics and workforce strategy. At SplashBI, he leads analytics innovation and customer advisory, shaping product roadmaps and cloud data architecture to help organizations gain maximum value from prebuilt KPIs, predictive models, and real-time insights. With extensive experience in the Oracle ecosystem and a strategic vision for business intelligence solutions, he strengthens the platform's ability to deliver scalable, high-impact analytics.

Ragu engages closely with HR and business leaders across industries – his perspective bridges product capabilities with the pressing needs of HR leaders navigating today’s fast-changing talent landscape.