HR teams have never reported more data than they do today. Dashboards are richer. Surveys are more frequent. Metrics are tracked with impressive discipline. And yet, something uncomfortable is happening behind the scenes. Leaders are not feeling more confident in their decisions.

According to industry research, over 70 percent of executives say they have access to more data than ever before, but fewer than half feel confident using it to make decisions. That gap is not caused by bad analytics. It is caused by too much of the wrong reporting.

More metrics have not led to better decisions. HR teams spend hours maintaining reports that look important, sound strategic, and get circulated widely, but rarely change what leaders actually do. Many of these reports exist for one simple reason. “We’ve always had them.”

In 2026, the problem is not a lack of data or tools. It is an excess of low-value reporting that dilutes focus and hides the signals that matter most. Adding another dashboard will not fix that.

This blog does not introduce new metrics to track. It shows what HR teams should stop reporting and what to focus on instead if they want sharper insight and better decisions.

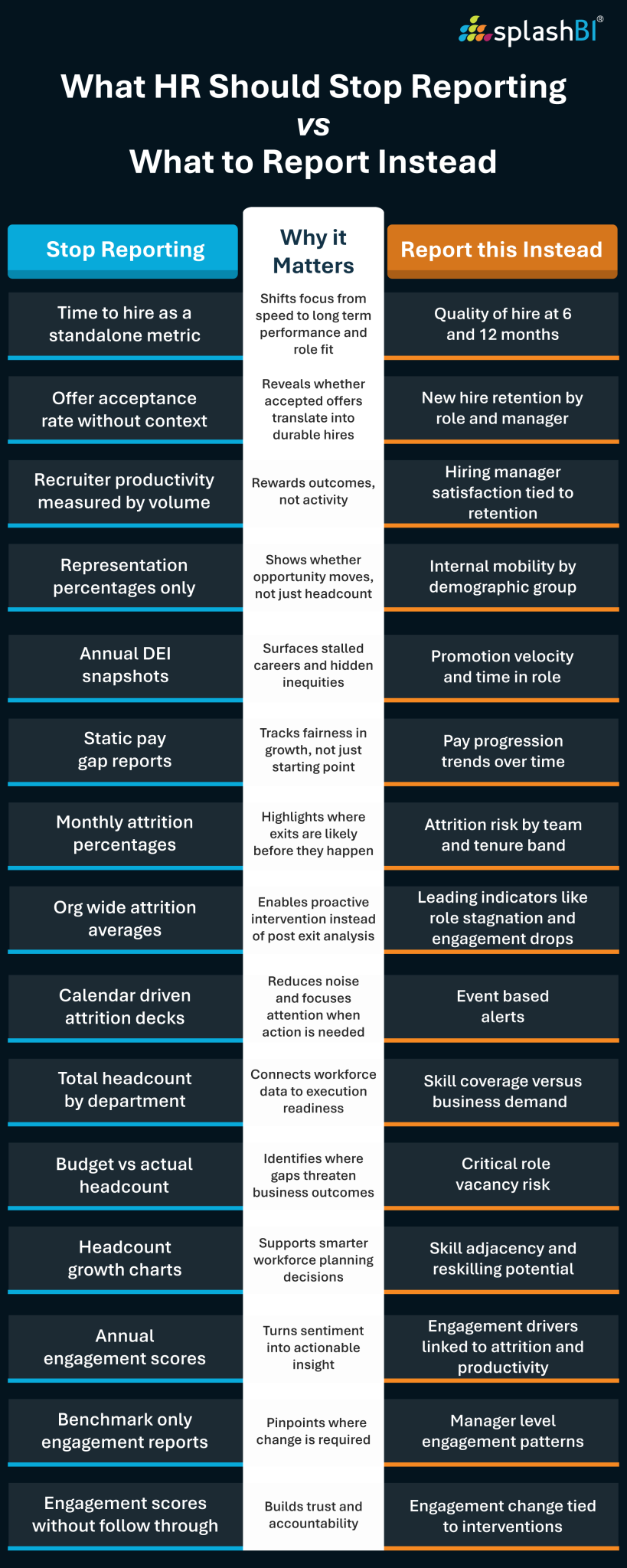

Stop reporting vanity hiring metrics that reward speed over quality

Hiring dashboards often look decisive. Roles opened. Roles closed. Time reduced. On paper, it feels like progress. In reality, many of these metrics reward motion, not outcomes.

What to stop reporting

- Time to hire as a standalone success metric

Speed alone says nothing about whether the hire worked.

- Offer acceptance rate without context

A high acceptance rate can reflect compensation alignment or simply low market competition.

- Recruiter productivity measured only by volume

Counting roles filled incentivizes speed over fit.

Why these metrics mislead

- Faster hiring does not equal better hiring.

- Speed hides downstream issues like early attrition, weak role alignment, or slow ramp-up.

- Teams start optimizing for closing requisitions instead of building durable teams.

When speed becomes the headline metric, quality quietly becomes a lagging problem.

What to report instead

- Quality of hire indicators tied to performance reviews at 6 and 12 months

- New hire attrition within the first year by role and manager

- Hiring manager satisfaction, paired with actual retention outcomes

These metrics shift the conversation from how fast roles were filled to whether those hires delivered value.
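As a rough illustration, first-year attrition by role and manager can be computed from a simple export of hire and exit dates. The sketch below is only that: the record layout, the names, and the 365-day window are assumptions made for this example, not a prescribed method.

```python
from collections import defaultdict
from datetime import date

# Hypothetical hire records: (role, manager, hire_date, exit_date or None).
# Assumes every hire was made at least a year before the analysis date,
# so each person has had a full first year in which to leave.
hires = [
    ("Engineer", "Avery", date(2024, 1, 15), None),
    ("Engineer", "Avery", date(2024, 2, 1), date(2024, 8, 20)),
    ("Engineer", "Blake", date(2024, 3, 10), date(2025, 6, 1)),
    ("Analyst", "Blake", date(2024, 4, 5), date(2024, 9, 30)),
]


def first_year_attrition(records, window_days=365):
    """Share of hires in each (role, manager) group who left within window_days."""
    hired = defaultdict(int)
    left_early = defaultdict(int)
    for role, manager, hire_date, exit_date in records:
        key = (role, manager)
        hired[key] += 1
        # Count only exits that happened inside the first-year window.
        if exit_date is not None and (exit_date - hire_date).days <= window_days:
            left_early[key] += 1
    return {key: left_early[key] / hired[key] for key in hired}


rates = first_year_attrition(hires)
for (role, manager), rate in sorted(rates.items()):
    print(f"{role} / {manager}: {rate:.0%} left within the first year")
```

Grouping by role and manager together is a deliberate choice here: a time-to-hire figure would look identical for all four hires, while this view shows where early exits cluster.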

Hiring success should be measured by impact over time, not velocity in isolation.

Stop reporting DEI metrics that don’t move beyond representation

Representation dashboards are often treated as the finish line. Percentages improve. Charts look balanced. Updates go out once a year. And yet, employee experience on the ground remains unchanged.

What to stop reporting

- Representation percentages without movement analysis

Who is present is only the starting point.

- One-dimensional diversity snapshots updated annually

Yearly views mask stagnation and slow progress.

- Static charts that show who is present but not what happens next

These reports describe a moment, not a journey.

Why these metrics mislead

- Representation alone does not reflect equity or inclusion.

- It hides stagnation in promotions, pay growth, and access to leadership roles.

- Leaders end up celebrating numbers that do not materially change career outcomes.

When DEI reporting stops at headcount mix, it creates comfort, not accountability.

What to report instead

- Internal mobility rates by demographic group

- Promotion velocity and time in role comparisons

- Pay progression trends over time, not just static pay gaps

These measures surface whether opportunity is distributed equitably, not just whether diversity exists. DEI progress shows up in movement, opportunity, and growth, not just in who appears on the org chart.
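To make the idea of promotion velocity concrete, here is a minimal sketch that compares median months in role before promotion across groups. The group labels and figures are invented for illustration, and the approach is an assumption of this example rather than a standard definition.

```python
from collections import defaultdict
from statistics import median

# Hypothetical promotion records: (group label, months in role before promotion).
# Limitation: only people who were promoted appear here, so anyone still
# waiting for a promotion is invisible in this view.
promotions = [
    ("Group A", 18), ("Group A", 22), ("Group A", 20),
    ("Group B", 30), ("Group B", 26), ("Group B", 34),
]


def median_months_to_promotion(records):
    """Median time in role before promotion, keyed by group label."""
    by_group = defaultdict(list)
    for group, months in records:
        by_group[group].append(months)
    return {group: median(values) for group, values in by_group.items()}


velocity = median_months_to_promotion(promotions)
for group, months in sorted(velocity.items()):
    print(f"{group}: median {months} months to promotion")
```

A representation chart could show both groups at equal headcount while this comparison shows one group waiting noticeably longer to advance.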

Remove monthly attrition reports that change nothing

Attrition reports are among the most widely circulated HR documents. They are also among the least acted on. Month after month, the numbers move slightly, decks get shared, and nothing meaningfully changes.

What to stop reporting

- Monthly attrition percentages sent as static decks

Regular distribution does not equal relevance.

- Org-wide averages without segmentation

A single number hides where the real risk lives.

- Reports that describe exits but do not explain drivers

Knowing who left is not the same as knowing why.

Why these metrics mislead

- Most months show marginal change, creating reporting fatigue.

- Aggregated numbers conceal risk pockets within teams or roles.

- HR ends up reacting after exits occur instead of anticipating them.

When attrition is treated as a calendar update, it becomes a lagging narrative rather than a leading signal.

What to report instead

- Leading indicators such as role stagnation, manager changes, or sudden engagement drops

- Attrition risk by team, tenure band, and role type

- Event-based alerts triggered by meaningful change, not reporting cycles

These insights prompt timely action rather than retrospective explanation.
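One way to picture an event-based alert is a rule that fires only when a team's attrition rate stands well above the org-wide rate. The sketch below assumes a hypothetical quarterly snapshot; the team names, the 2x multiplier, and the minimum of three exits are illustrative thresholds, not recommendations.

```python
# Hypothetical quarterly snapshot: team -> (headcount at start, exits in quarter).
teams = {
    "Sales": (40, 2),
    "Support": (25, 6),
    "Engineering": (60, 2),
    "Finance": (15, 0),
}


def attrition_alerts(snapshot, multiplier=2.0, min_exits=3):
    """Return the org-wide rate and the teams whose rate is far above it.

    A team is flagged only if it has at least min_exits exits (to avoid
    noise from very small numbers) and its rate is at least
    multiplier times the org-wide rate.
    """
    total_headcount = sum(headcount for headcount, _ in snapshot.values())
    total_exits = sum(exits for _, exits in snapshot.values())
    org_rate = total_exits / total_headcount
    alerts = []
    for team, (headcount, exits) in snapshot.items():
        if headcount == 0:
            continue  # Skip empty teams rather than divide by zero.
        rate = exits / headcount
        if exits >= min_exits and rate >= multiplier * org_rate:
            alerts.append((team, round(rate, 3)))
    return org_rate, alerts


org_rate, alerts = attrition_alerts(teams)
print(f"Org-wide attrition: {org_rate:.1%}")
for team, rate in alerts:
    print(f"Investigate {team}: {rate:.1%} attrition this quarter")
```

In this made-up data the org-wide figure is about 7 percent, which would look unremarkable in a monthly deck, while one team is losing people at more than three times that rate.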

Attrition should trigger investigation, not routine reporting.

Kill headcount reports that mask skill gaps

Headcount reports are often treated as a proxy for workforce health. Numbers go up. Capacity appears to increase. But beneath the surface, critical capabilities may still be missing.

What to stop reporting

- Total headcount by department

This shows scale, not readiness.

- Budgeted versus actual headcount without skill context

Hitting targets does not mean having the right capabilities.

- Growth charts that assume people are interchangeable

Roles are not equal and skills are not fungible.

Why these metrics mislead

- Headcount growth can coexist with severe skill shortages.

- It creates a false sense of capacity and confidence.

- Workforce planning decisions get delayed or misdirected as leaders assume coverage that does not exist.

When headcount becomes the headline, capability gaps remain invisible.

What to report instead

- Skill coverage versus business demand by function and role

- Critical role vacancy risk tied to business impact

- Skill adjacency and reskilling potential within teams

These views reveal whether the workforce can actually execute on strategy.
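A skill coverage view can be sketched as a comparison of people needed against people available for each skill. The skills and numbers below are invented, and treating one person as covering exactly one skill is a simplifying assumption of this example.

```python
# Hypothetical inputs: people needed per skill (demand) and people
# currently proficient in it (supply). Both total 13, so a plain
# headcount report would show the plan as fully staffed.
demand = {"data engineering": 6, "cloud security": 4, "payroll": 3}
supply = {"data engineering": 3, "cloud security": 4, "payroll": 6}


def skill_coverage(demand, supply):
    """Coverage ratio and unfilled gap for each skill the business needs."""
    report = {}
    for skill, needed in demand.items():
        if needed <= 0:
            continue  # No demand means nothing to cover.
        available = supply.get(skill, 0)
        report[skill] = {
            "coverage": available / needed,
            "gap": max(needed - available, 0),
        }
    return report


coverage = skill_coverage(demand, supply)
for skill, row in coverage.items():
    print(f"{skill}: {row['coverage']:.0%} covered, gap of {row['gap']}")
```

Total supply equals total demand in this example, yet data engineering is only half covered while payroll is overstaffed, which is exactly the gap a headcount total conceals.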

Workforce strength is about capability alignment, not just meeting numbers.

Stop reporting engagement scores with no decision path

Engagement surveys promise insight, but too often they deliver a number without direction. Scores get shared, compared to benchmarks, and quietly archived until the next cycle.

What to stop reporting

- Annual engagement scores presented without actionability

A score alone does not tell leaders what to change.

- Benchmark-driven scores with no internal context

External comparisons rarely explain internal realities.

- Scores disconnected from retention or performance

Engagement becomes an abstract sentiment, not a business signal.

Why these metrics mislead

- Leaders acknowledge the score and move on.

- HR becomes a reporting function instead of a strategic partner.

- Employees see surveys without visible follow-through, eroding trust.

When engagement data lacks a decision path, it becomes performative rather than practical.

What to report instead

- Engagement drivers correlated with attrition and productivity

- Manager-level patterns instead of company-wide averages

- Change over time tied directly to specific interventions

These insights point to clear actions rather than vague interpretations.
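Tying score changes to interventions can start with something as simple as grouping manager-level changes by what was tried. The sketch below uses invented managers, scores on an assumed 1 to 5 scale, and a hypothetical "weekly 1:1s" intervention; a before-and-after difference like this suggests where to look and does not prove cause.

```python
from collections import defaultdict

# Hypothetical manager-level survey results:
# (manager, intervention or None, score before, score after).
results = [
    ("Avery", "weekly 1:1s", 3.2, 3.9),
    ("Blake", None, 3.8, 3.7),
    ("Casey", "weekly 1:1s", 3.0, 3.6),
    ("Devon", None, 3.5, 3.5),
]


def change_by_intervention(rows):
    """Average score change per intervention label, rounded to two decimals."""
    changes = defaultdict(list)
    for _manager, intervention, before, after in rows:
        label = intervention or "no intervention"
        changes[label].append(after - before)
    return {
        label: round(sum(values) / len(values), 2)
        for label, values in changes.items()
    }


summary = change_by_intervention(results)
for label, change in summary.items():
    print(f"{label}: average change {change:+.2f}")
```

A single company-wide average would blend these four managers into a small positive number, while the grouped view shows the movement sitting with the teams where something specific changed.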

Engagement data should answer what to fix, where, and why.

Conclusion: Report less and see more with conversational HR analytics

The future of HR reporting is not about adding more dashboards or tracking more metrics. It is about reporting less and seeing more. The teams that will move fastest in 2026 are not the ones buried under charts. They are the ones who have better conversations with their data.

Conversational HR reporting changes the role of reporting and analytics entirely. Instead of scanning static charts, leaders can ask why something is happening, explore patterns in real time, and drill into details the moment a question arises.

Curiosity replaces compliance.

Insight replaces reporting rituals.

This shift turns HR analytics into a decision engine rather than a reporting obligation. Data stops being something HR prepares for others and becomes something leaders actively use. The focus moves from explaining the past to shaping what happens next.

Platforms like SplashBI enable this transition by helping HR teams move from scheduled reports to insight on demand. When leaders can ask questions in plain language and explore data live, the right metrics surface naturally without forcing them onto a dashboard.

Because in 2026, the most mature HR teams will not be the ones reporting the most metrics. They will be the ones brave enough to stop reporting metrics that don’t bring meaningful value.